| Audience | Marketers and analysts who want to compare performance across randomized test and control groups. |
|---|---|
| Prerequisites | |

Experiments help you evaluate how different marketing strategies perform by analyzing outcomes across your Audience Split groups. You can measure lift, compare treatment and holdout results, and understand whether your campaigns drive meaningful changes in user behavior.

Learning objectives

After reading this article, you’ll know how to:

- Understand how Experiments are created and managed

- Set up Audience Splits for measurement

- Navigate to and review all Experiments

- Configure metrics, start dates, and measurement windows

- Interpret lift, confidence intervals, and performance trends

- Normalize results for clearer group comparisons

Overview

Experiments provide a measurement layer for Audience Splits.

Whenever you create an Audience Split in Customer Studio, Hightouch automatically generates a corresponding Experiment so you can track performance across randomized groups.

Experiments allow you to:

- Compare holdout vs. treatment outcomes

- Analyze lift and confidence intervals

- Visualize performance over time

- Evaluate strategies in real time

| Feature | Description |

|---|---|

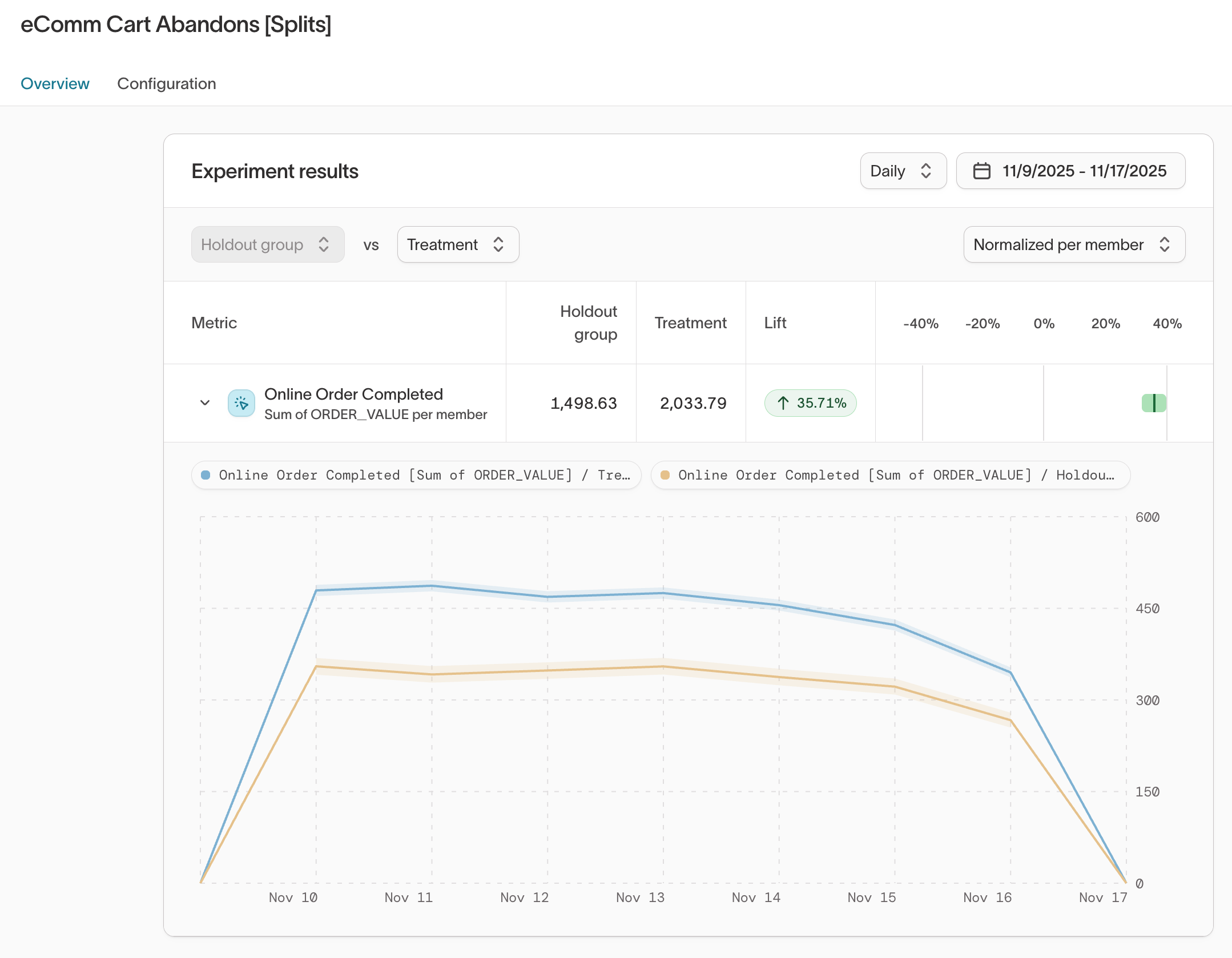

| Experiment results | Displays lift, performance trends, and confidence intervals for your selected metric |

| Configuration | Controls metrics, measurement windows, and start dates used for computing results |

| Normalization | Allows per-member or baseline-scaled comparisons |

Splits measurement charts have been deprecated. All split-based reporting now lives in Intelligence → Experiments.

How Experiments are created

Experiments are automatically managed based on your Audience Splits:

- Creating an Audience Split automatically creates a new Experiment.

- Disabling or deleting an Audience Split automatically removes its Experiment.

- Re-enabling or restoring an Audience Split automatically restores the Experiment.

This ensures measurement stays aligned with the audiences you’re actively using.

Setup and requirements

1. Split your audience

Before measuring experiment results, ensure that:

- You’ve created Audience Splits in Customer Studio.

- The audience has synced at least once since splits were created.

- Users have generated measurable events (such as purchases, page views, or clicks).
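As a rough illustration, the readiness conditions above can be expressed as a checklist. This is a minimal sketch, not a Hightouch API: `AudienceState`, its fields, and `ready_to_measure` are hypothetical names, and the sketch assumes "synced at least once since splits were created" means the most recent sync timestamp is at or after the split creation timestamp.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class AudienceState:
    # Hypothetical fields for illustration; not a Hightouch object.
    splits_created_at: Optional[datetime]  # None if no splits exist yet
    last_sync_at: Optional[datetime]       # None if the audience never synced
    event_count: int                       # measurable events from members


def ready_to_measure(state: AudienceState) -> list[str]:
    """Return the unmet prerequisites; an empty list means ready."""
    problems = []
    if state.splits_created_at is None:
        problems.append("create Audience Splits in Customer Studio")
    elif state.last_sync_at is None or state.last_sync_at < state.splits_created_at:
        # A sync that predates the splits does not count.
        problems.append("sync the audience at least once after creating splits")
    if state.event_count == 0:
        problems.append("wait for users to generate measurable events")
    return problems
```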

Hightouch creates a corresponding Experiment in the Experiments section of Intelligence for every Audience Split, and removes or restores it along with the split (see How Experiments are created).

Measure results

The Experiments section of Intelligence helps you compare outcomes between audience groups to evaluate the impact of your campaigns.

Experiments are available under Intelligence → Experiments.

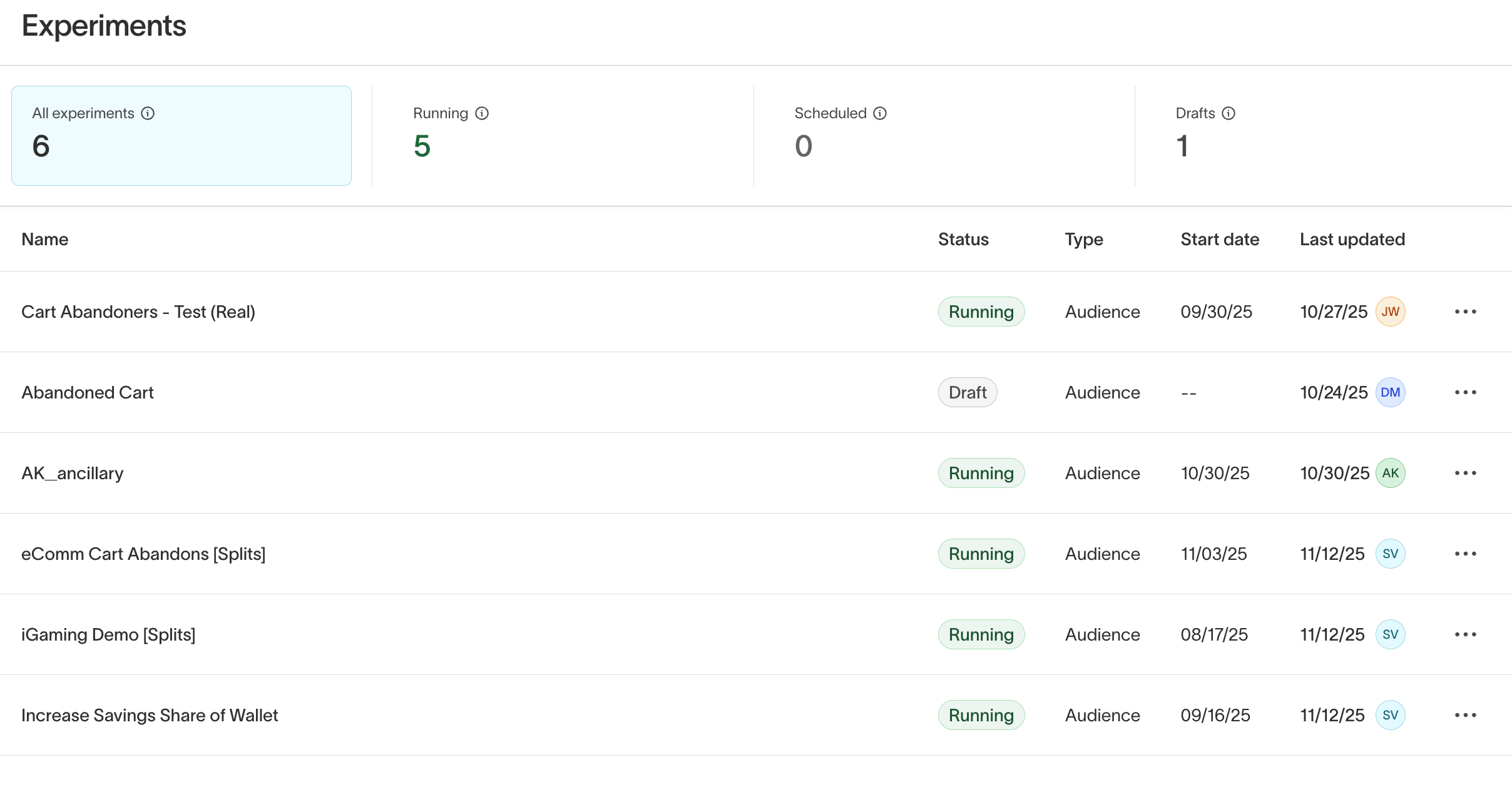

1. View the list of Experiments

The Experiments list shows all experiments, their statuses, and recent updates.

Statuses include:

- Draft: The experiment is missing a primary metric, a start date, or both. Both are required for measurement (see Configure an experiment).

- Scheduled: Fully configured; the start date is in the future.

- Running: Fully configured; the start date is today or in the past.
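The status rules reduce to two checks. The sketch below is illustrative only: `experiment_status` is a hypothetical helper rather than part of Hightouch, and it assumes the start date and "today" are compared as calendar dates in a single time zone.

```python
from datetime import date
from typing import Optional


def experiment_status(primary_metric: Optional[str],
                      start_date: Optional[date],
                      today: date) -> str:
    """Return the list-view status under the Draft/Scheduled/Running rules."""
    # Draft: a primary metric, a start date, or both are missing.
    if primary_metric is None or start_date is None:
        return "Draft"
    # Scheduled: fully configured, but measurement starts in the future.
    if start_date > today:
        return "Scheduled"
    # Running: fully configured and the start date is today or earlier.
    return "Running"
```

For example, an experiment with a primary metric and a start date next month reports `"Scheduled"` until that date arrives.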

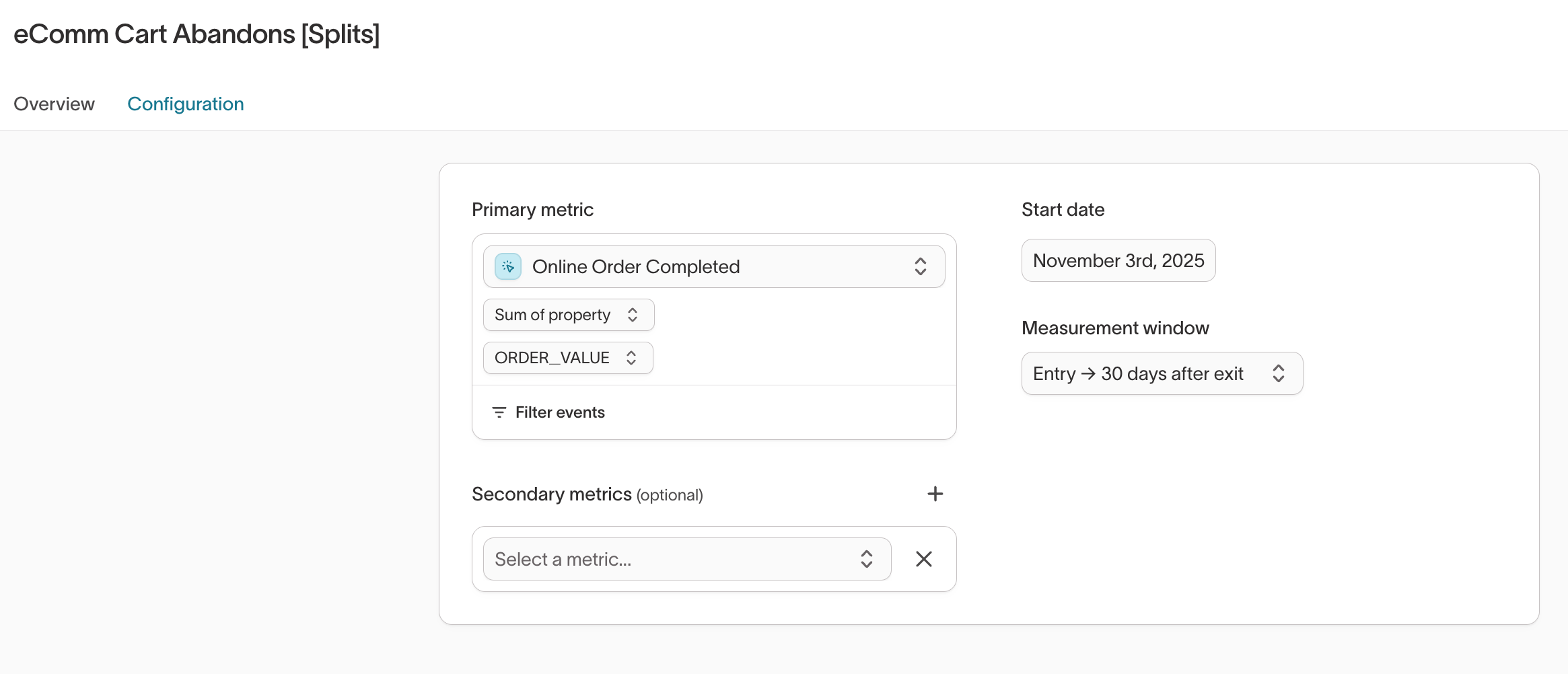

2. Configure an experiment

Open an experiment and select the Configuration tab.

From here, you can:

- Choose a primary metric (required) and optional secondary metrics (e.g., Conversions, Revenue).

- Learn how to create a new metric.

- Optional: Use the Filter by dropdown to refine by user properties or events.

- Set a Start date:

  - Determines when measurement begins and does not apply retroactively.

  - Does not affect sync or activation behavior.

- Choose a Measurement window:

  - Example: Entry → 30 days after entry measures events that occur from the moment a user enters the audience through 30 days later.
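To make the window concrete, here is one way to decide whether a single event counts toward results. This sketch assumes an "Entry → N days after entry" window with both ends inclusive, and it combines the window with the start date by excluding any event that occurs before measurement begins. `in_measurement_window` and its parameters are hypothetical, and Hightouch's exact boundary handling may differ.

```python
from datetime import datetime, timedelta


def in_measurement_window(event_at: datetime,
                          entered_at: datetime,
                          start_at: datetime,
                          window_days: int = 30) -> bool:
    """Hypothetical check: does this event count toward experiment results?"""
    window_end = entered_at + timedelta(days=window_days)
    # The event must fall inside the user's own window (inclusive bounds) ...
    in_window = entered_at <= event_at <= window_end
    # ... and must not predate the start date, which is never applied retroactively.
    return in_window and event_at >= start_at
```

Under these assumptions, a user who entered the audience before the start date still contributes events, but only those that occur on or after the start date.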

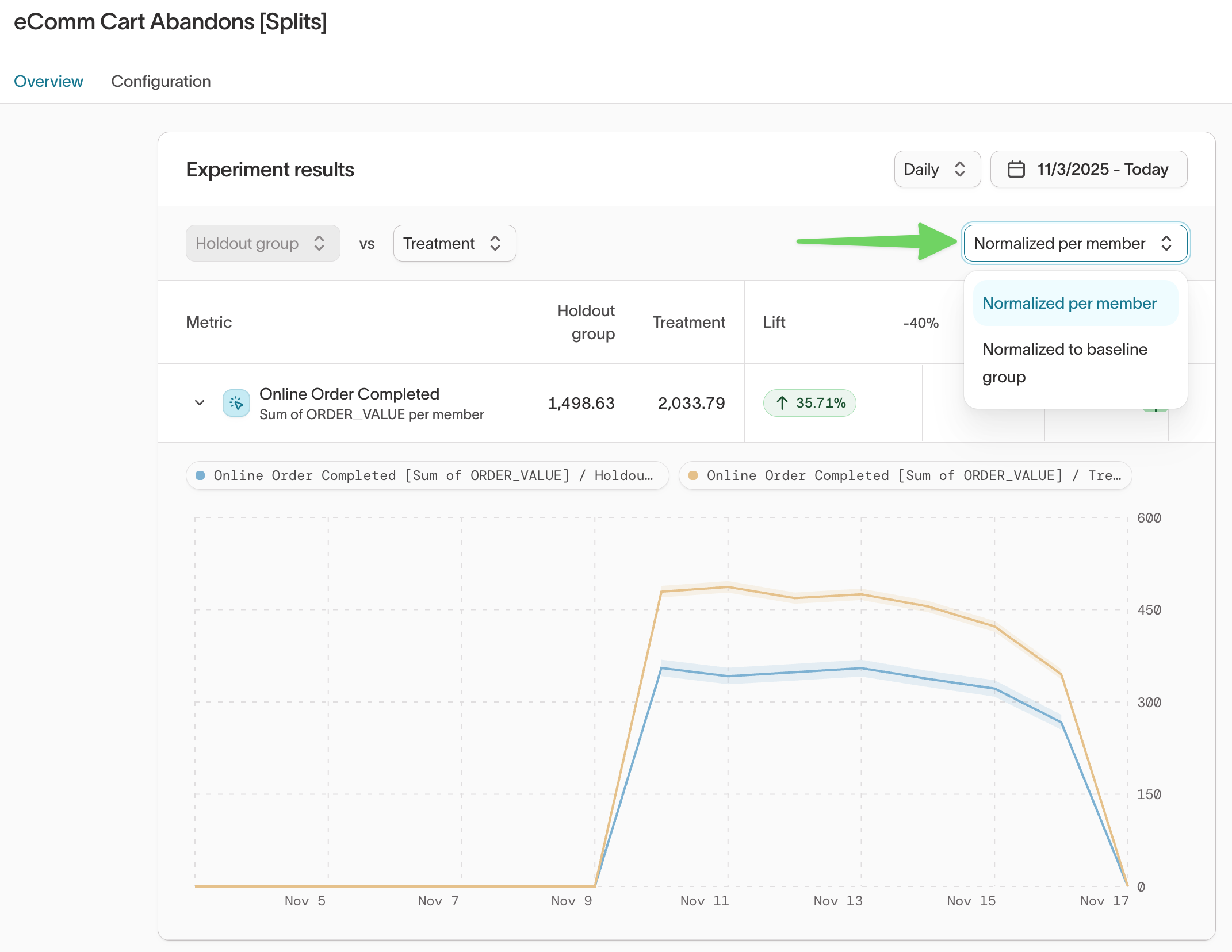

3. Interpret results

The Overview tab displays experiment outcomes and performance trends.

Key elements:

- Lift % (top-center): Percentage difference between treatment and holdout group performance.

- Lift interval bar (top-right):

  - Green: Statistically significant positive lift

  - Red: Statistically significant negative lift

  - Gray: Not statistically significant (the interval includes 0%)

- Performance lines (main chart):

  - Solid lines represent average performance over time

  - Shaded regions show the 95% confidence interval
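The lift figure and the interval bar color can be sketched as follows. This assumes lift is the relative difference of per-member averages, (treatment - holdout) / holdout × 100, and that the bar is gray whenever the interval touches or crosses 0%. Both functions are hypothetical helpers for illustration, not part of the product.

```python
def lift_percent(treatment_per_member: float, holdout_per_member: float) -> float:
    """Relative lift of treatment over holdout, in percent (assumed definition)."""
    if holdout_per_member == 0:
        raise ValueError("holdout baseline is zero; relative lift is undefined")
    return (treatment_per_member - holdout_per_member) / holdout_per_member * 100


def interval_color(lower_pct: float, upper_pct: float) -> str:
    """Map a lift interval (in percent) to the bar colors described above."""
    if lower_pct > 0:
        return "green"  # entire interval above 0%: significant positive lift
    if upper_pct < 0:
        return "red"    # entire interval below 0%: significant negative lift
    return "gray"       # interval includes 0%: not statistically significant
```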

Lift intervals use a Bayesian method, allowing you to monitor results continuously without waiting for an experiment to complete.
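For intuition about how a Bayesian lift interval can be computed, the sketch below uses a Beta-Binomial model for a conversion-rate metric: each group's rate gets a Beta posterior from a uniform Beta(1, 1) prior, and the interval is the central 95% of simulated relative-lift values. This is a standard textbook technique chosen for illustration, not Hightouch's documented model; the function name, prior, and number of draws are all assumptions. Because the posterior is simply recomputed from the counts observed so far, the calculation can be rerun at any point during an experiment.

```python
import random


def bayesian_lift_interval(treat_conv: int, treat_n: int,
                           hold_conv: int, hold_n: int,
                           draws: int = 20_000, seed: int = 0,
                           level: float = 0.95) -> tuple[float, float]:
    """Credible interval for relative lift (in %) of a conversion rate.

    Sketch only: Beta-Binomial model with a uniform Beta(1, 1) prior per group.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    lifts = []
    for _ in range(draws):
        # Sample each group's conversion rate from its Beta posterior.
        p_t = rng.betavariate(1 + treat_conv, 1 + treat_n - treat_conv)
        p_h = rng.betavariate(1 + hold_conv, 1 + hold_n - hold_conv)
        lifts.append((p_t - p_h) / p_h * 100)
    lifts.sort()
    # Keep the central `level` share of the simulated lift distribution.
    tail = (1 - level) / 2
    lower = lifts[int(tail * draws)]
    upper = lifts[int((1 - tail) * draws) - 1]
    return lower, upper
```

With 300 conversions out of 1,000 treatment members and 200 out of 1,000 holdout members, the whole interval lies above 0%, which would correspond to a green bar; identical counts in both groups produce an interval that spans 0%.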

4. Normalize results

Use the normalization dropdown to switch perspectives:

- Normalized per member (default): Shows average performance per user

- Normalized to baseline group: Scales results for an even comparison

Hover over the lift card to view raw totals.
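The two normalization modes can be sketched as simple arithmetic. Per-member normalization divides a group's total by its member count. For baseline normalization, this sketch assumes each group's per-member average is projected onto the baseline (holdout) group's size so that totals are comparable at equal scale; that interpretation and both function names are assumptions, not documented behavior.

```python
def per_member(total: float, members: int) -> float:
    """Average metric value per group member (the default view)."""
    if members <= 0:
        raise ValueError("group must have at least one member")
    return total / members


def scaled_to_baseline(total: float, members: int, baseline_members: int) -> float:
    """Hypothetical baseline scaling: project a group's total onto the
    baseline group's size so that group totals are directly comparable."""
    return per_member(total, members) * baseline_members
```

For example, a treatment group of 9,000 members with 45,000 in revenue averages 5.0 per member; scaled to a 1,000-member holdout, it corresponds to 5,000, which can be compared directly with the holdout's own total.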